Bing Chat and Bing Chat Enterprise can interact with the Office Online apps. The result comes close to what Microsoft Copilot promises for the M365 apps.

Bing Chat Enterprise

Bing Chat and Bing Chat Enterprise are extensions to Bing Search available at https://bing.com/chat. Essentially, both solutions offer ChatGPT-like capabilities that are further enhanced by Bing Search.

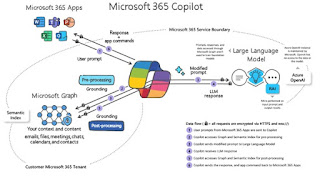

Bing Chat Enterprise differs from Bing Chat in that it also addresses data privacy, and sign-in is via a Microsoft Entra ID account (formerly Azure AD). This allows the Microsoft Entra security features to be used to secure and control the login. Prompts and generated responses are not saved, analyzed, or used by Bing Chat Enterprise to train the language models. However, Bing Chat Enterprise cannot access company data in Microsoft 365 and use it to generate answers. This is only possible with Microsoft Copilot or via Azure OpenAI.

Bing Chat Enterprise is available through https://bing.com/chat and the Microsoft Edge for Business sidebar. A Microsoft 365 E3, E5, Business Standard, Business Premium, or A3 or A5 license is required to use the feature. Further details on this topic are described here: Bing Chat Enterprise - Your AI-powered chat with commercial data protection

Bing Chat Enterprise & Office Online Apps

To use Bing Chat together with the M365 Office Online apps, you have to go through the Microsoft Edge sidebar. Details on the configuration and on how IT administrators can control the feature across the enterprise are described here: Manage the sidebar in Microsoft Edge.

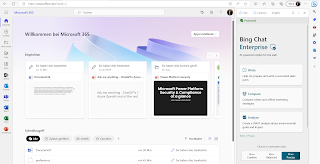

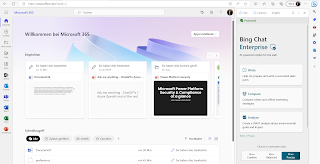

If the feature is enabled, it looks like this:

Bing Chat Enterprise can now be used with any app that runs in the browser.

Two functions are available for this: Chat and Compose. The Compose function offers detailed options for generating text in the required context and style: the tone, format, and length of the text can all be specified. The following example shows the result for the question "What can you do with SharePoint Online?" with the settings Tone: Professional, Format: Paragraph, and Length: Medium:

With the "Add to site" function, the generated text can be transferred to the Office Online apps Word, Excel, PowerPoint, Outlook, Teams, SharePoint, etc. The text is inserted at the cursor position in the app. In the following example, the generated text is inserted directly into a Teams chat via the "Add to site" button:

The reverse direction also works, but it requires the "Chat" function. In the following example, the user has opened an email in Outlook Online and wants its text translated into Spanish. To do this, the text must first be highlighted. Bing Chat then automatically asks what should be done with the selected text. The user enters "Please translate into Spanish", and Bing Chat performs the translation.

To make this feature available, the "Allow access to any web page or PDF" option must be enabled in the Bing Chat settings under "Notification and App Settings":