GPT = Generative Pre-trained Transformer

- G = Generative -> An output is generated

- P = Pre-trained -> The model was pre-trained

- T = Transformer

OpenAI released ChatGPT in November 2022. In June 2023, I wrote my first blog post on this topic: Next Level AI. A lot has happened since then: there have been further versions and new models such as GPT-4 and GPT-4o, as well as small language models such as Phi-3. It is still true, however, that ChatGPT, and therefore services such as Microsoft Copilot, are not intelligent in the true sense of the word. Nevertheless, they are very helpful, and that is why they are here to stay.

To ensure that the models generate useful results from the start (the “G” for generative in the name GPT), they are pre-trained (the “P” for pre-trained in the name GPT).

These models are trained using deep learning. The model starts with random values (its weights) and uses them to calculate an output. This calculated output is then compared with the output that should ideally have been produced. A mechanism called backpropagation feeds the error, i.e. the deviation from the ideal result, back into the model as a correction to its values. After many such corrections, the correct output becomes likely when the same input is used again. The “T” in the name GPT stands for Transformer, the neural network architecture these models are built on.
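The training loop described above can be sketched in a few lines of Python. This is a deliberately tiny illustration, not how GPT models are actually trained: it assumes a "model" with a single weight, a hypothetical `train` function, and made-up sample data in which the ideal output is always three times the input.

```python
import random


def train(samples, steps=200, learning_rate=0.01, seed=0):
    """Fit a single weight so that weight * x approximates the target."""
    rng = random.Random(seed)
    weight = rng.uniform(-1.0, 1.0)  # start from a random value

    for _ in range(steps):
        for x, target in samples:
            output = weight * x        # calculated output
            error = output - target    # deviation from the ideal output
            gradient = 2 * error * x   # derivative of error**2 w.r.t. weight
            weight -= learning_rate * gradient  # feed the error back as a correction
    return weight


# Hypothetical training data: the ideal output is 3 * input.
samples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
trained_weight = train(samples)
print(trained_weight)  # approaches 3.0 as the corrections accumulate
```

Real models repeat the same idea with billions of weights instead of one, which is why pre-training is so expensive.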

Details and further information: https://en.wikipedia.org/wiki/Generative_pre-trained_transformer

According to Gregory Bateson's learning theory, this corresponds to so-called “Zero-Order” learning, also known as trial and error. But deep learning sounds better 😉.

Users report that Microsoft Copilot answers in a friendly way when they ask nicely and in a rude way when their prompt is rude. This effect can be explained by the way the technology works. Pre-processing takes place before the prompt is sent to the LLM. Details are described here: Microsoft Copilot for Microsoft 365 overview. Something similar happens with OpenAI / ChatGPT. The prompt, as entered by the user, remains the baseline. So if the prompt is phrased in an unfriendly way, the response will match that tone. The orchestration / grounding has no influence on this. Quote in the context of Copilot:

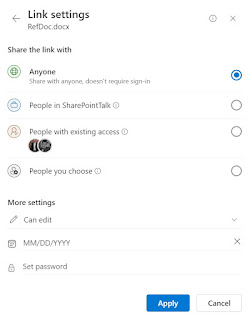

Copilot then pre-processes the input prompt through an approach called grounding, which improves the specificity of the prompt, to help you get answers that are relevant and actionable to your specific task. The prompt can include text from input files or other content discovered by Copilot, and Copilot sends this prompt to the LLM for processing. Copilot only accesses data that an individual user has existing access to, based on, for example, existing Microsoft 365 role-based access controls.
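To make the idea of grounding more tangible, here is a minimal sketch in Python. It is not Copilot's actual implementation: the `Document` class, the `ground_prompt` function and the sample data are hypothetical and only illustrate two points from the text, namely that content is added only from sources the user may access, and that the user's own wording is passed on unchanged.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_users: frozenset  # hypothetical stand-in for access controls


def ground_prompt(user_prompt: str, user: str, documents: list) -> str:
    """Add accessible context to the prompt; keep the user's wording as-is."""
    accessible = [doc for doc in documents if user in doc.allowed_users]
    context = "\n".join(f"[{doc.title}] {doc.text}" for doc in accessible)
    return f"Context:\n{context}\n\nUser prompt:\n{user_prompt}"


documents = [
    Document("Q3 budget", "The Q3 budget is 40,000 EUR.", frozenset({"anna"})),
    Document("HR notes", "Confidential HR notes.", frozenset({"ben"})),
]
grounded = ground_prompt("Give me the budget. Now.", "anna", documents)
print(grounded)
```

In this sketch the curt wording of the user prompt reaches the model untouched; only the context around it changes.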

This phenomenon, that Copilot's answer mirrors the language of the prompt entered by the user, is therefore not related to the next stages of learning according to Gregory Bateson, protolearning or even deuterolearning.

- Protolearning can be regarded as simple association. I learn that when I see green, I go, and when I see red, I stop.

- Deuterolearning is a learning of context. If you reverse the association, how long does it take for the organism to adapt?

Source: https://www.aaas.org/taxonomy/term/9/protolearning-deuterolearning-and-beyond

In fact, you can even tell ChatGPT and Copilot which role and style they should use. Example: “Please formulate a reply to this e-mail and use a very friendly style.”
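If you work with a model programmatically, role and style are often passed as a separate instruction next to the actual task. The following sketch assumes the role/content message layout that many chat-style interfaces use; the `build_messages` function is hypothetical, and no real service is called.

```python
def build_messages(role: str, style: str, task: str) -> list:
    """Combine a role/style instruction with the user's task."""
    instruction = f"You are {role}. Write in a {style} style."
    return [
        {"role": "system", "content": instruction},  # role and style
        {"role": "user", "content": task},           # the actual request
    ]


messages = build_messages(
    role="an assistant who drafts e-mail replies",
    style="very friendly",
    task="Please formulate a reply to this e-mail.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the style in its own instruction means the same task can be reused with a different tone by changing a single argument.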

Here to stay

Together with LinkedIn, Microsoft has published the 2024 Work Trend Index Annual Report. It identifies the following four key points:

- Employees want AI at work - and they won’t wait for companies to catch up.

- For employees, AI raises the bar and breaks the career ceiling.

- The rise of the AI power user - and what they reveal about the future.

- The Path Forward

Employees want AI at work

Just as the iPhone once did, generative AI applications are currently spreading through companies mostly by going viral. Employees are familiar with solutions such as ChatGPT or the video creator HeyGen from their private lives. They have heard about them from friends or played around with them at home. HeyGen's claim sums it up: “In just a few clicks, you can generate custom videos for social media, presentations, education and more.”

Unless you work in marketing or in the PR department, social media usually belongs to a private context. “Presentations, education and more” is the bridge to business.

And they won’t wait for companies to catch up

- I research and try new prompts

- I regularly experiment with different ways of using AI

- Before starting a task, I ask myself, “Could AI help me with this?”

- AI helps me be more creative

- AI helps me be more productive

- AI helps me focus on the most important work

The path to the future

- Identify the business context of a problem or challenge and then try to use AI to solve it.

- Take a top-down and bottom-up approach. Ask both your employees and the management in the company about their use cases with AI.

- Empower employees: AI in a business context is not intuitive. Factors such as the EU AI Act (the AI Regulation), the GDPR and the question of who has access to which information are important here.